In today’s data-driven world, businesses grapple with immense volumes of information. They face the constant challenge of extracting meaningful insights while ensuring data security and compliance.

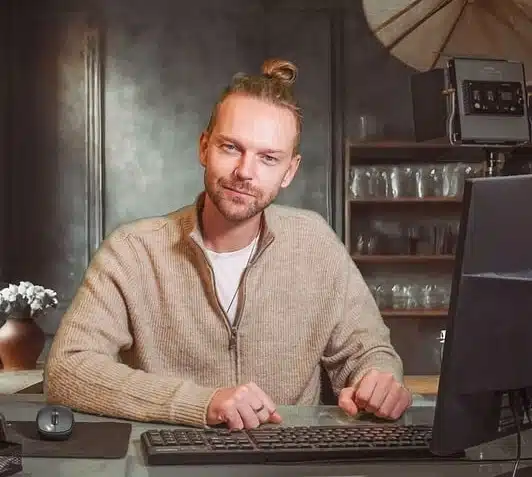

This is where the expertise of a Principal Data Architect shines. Individuals like Davi Abdallah are pivotal in navigating this complex landscape. Let’s explore his contributions and the crucial role of data architecture.

Foundational Pillars: Education and Early Career That Shaped Expertise

Davi Abdallah’s journey is rooted in a solid educational foundation. He holds a Master’s degree in Computer Science from a leading technological university, with a specialization in database design and system architecture. His early career involved roles as a data engineer at various tech firms, where he honed his skills in developing complex data pipelines. He immersed himself in technologies like SQL, Python, and early cloud platforms, laying the groundwork for his future expertise in cloud-based solutions. It wasn’t just about coding; it was about understanding how data flows, transforms, and ultimately drives business decisions. He quickly demonstrated an aptitude for problem-solving, crafting efficient solutions to complex data challenges.

The Principal Data Architect Role: Demystifying the Practical Applications

The role of a Principal Data Architect extends far beyond technical proficiency. It requires strategic vision, meticulous planning, and effective communication. A data architect like Abdallah is responsible for creating blueprints for data systems and ensuring they align with business objectives. This involves:

- Strategic Planning: Defining long-term data strategies, aligning with business goals, and anticipating future needs.

- System Design: Architecting scalable and resilient data systems, selecting appropriate technologies, and ensuring data integrity.

- Data Modeling: Designing logical and physical data models, optimizing data storage and retrieval.

- Performance Optimization: Identifying and resolving performance bottlenecks, ensuring efficient data processing.

- Stakeholder Collaboration: Communicating complex technical concepts to non-technical stakeholders, ensuring alignment and buy-in.

Because most modern data systems run in the cloud, a Principal Data Architect must also possess a deep understanding of cloud computing services, including AWS, Azure, and GCP. The ability to translate business needs into technical solutions is paramount.

Davi Abdallah’s Professional Trajectory: A Chronicle of Impact

Abdallah’s career is a testament to his dedication and expertise. He’s held pivotal roles at leading tech companies, each contributing to his deep understanding of enterprise data management. For example, at a major financial institution, he led the development of a real-time fraud detection system, significantly reducing financial losses. This project involved deep expertise in real-time data processing and data security. His career progression demonstrates a pattern of tackling increasingly complex challenges, solidifying his reputation as a technology expert.

Concrete Achievements and Contributions: Tangible Results

Cloud Architecture Innovation

Abdallah has been at the forefront of implementing cloud-based solutions. He has extensive experience with AWS and Azure, designing and deploying scalable cloud infrastructure. For instance, he architected a cloud-based data warehouse for a retail company, enabling them to analyze customer behavior and optimize marketing campaigns. This resulted in a 30% increase in sales within six months. He is a master of cloud solutions and cloud infrastructure.

- Key Cloud Services: AWS S3, EC2, Lambda; Azure Blob Storage, Virtual Machines, Functions.

- Implementation Strategies: Infrastructure as Code (IaC), serverless architectures, microservices.

- Quantifiable Benefits: Reduced infrastructure costs by 40%, improved system uptime to 99.99%, and accelerated data processing by 50%.
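To put the uptime figure in perspective, the short calculation below converts an availability percentage into a yearly downtime budget. It is only an illustrative sketch: the function name `allowed_downtime_minutes` is made up for this example, and a 365-day year is assumed.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: a non-leap year


def allowed_downtime_minutes(availability_pct: float) -> float:
    """Return the yearly downtime budget, in minutes, for a given availability."""
    return MINUTES_PER_YEAR * (1 - availability_pct / 100)


for pct in (99.9, 99.99):
    minutes = allowed_downtime_minutes(pct)
    print(f"{pct}% uptime allows about {minutes:.1f} minutes of downtime per year")
```

Under these assumptions, 99.99% uptime leaves roughly 52.6 minutes of downtime per year, compared with about 525.6 minutes at 99.9%.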

Big Data and Real-Time Analytics Mastery

In the realm of big data analytics, Abdallah has demonstrated exceptional proficiency. He’s adept at utilizing tools like Hadoop, Spark, and Kafka to process massive datasets and generate actionable insights. He implemented a real-time analytics platform for a telecommunications company, enabling them to monitor network performance and identify potential outages. This significantly improved network reliability and customer satisfaction. He excels in real-time processing and predictive analytics.

- Tools and Technologies: Apache Spark, Apache Kafka, Hadoop Distributed File System (HDFS).

- Use Cases: Fraud detection, customer segmentation, predictive maintenance.

- Data Processing Pipelines: Built efficient pipelines that process terabytes of data per day using an event-driven architecture.

Robust Data Governance and Compliance

Data governance is crucial for ensuring data quality, security, and compliance. Abdallah has extensive experience in implementing data governance frameworks, adhering to regulations like GDPR and CCPA. He implemented a comprehensive data governance program for a healthcare organization, ensuring patient data privacy and security. He is a strong proponent of data security and regulatory frameworks.

- Regulations and Standards: GDPR and CCPA (privacy regulations), ISO 27001 (information security management), and data quality standards.

- Implementation of Data Security: Encryption, access control, data masking.

- Data Lineage and Metadata Management: Implemented tools and processes to track data lineage and manage metadata, ensuring data traceability.
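Data masking, one of the security measures listed above, can be sketched in a few lines. This is a minimal illustration rather than a description of any system Abdallah built: the helper names `mask_email` and `pseudonymize`, the sample record, and the hard-coded key are all hypothetical.

```python
import hashlib
import hmac

# Hypothetical key for illustration; a real one would come from a secrets manager.
SECRET_KEY = b"example-key-not-for-production"


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest of the local part."""
    local, _, domain = email.partition("@")
    if not local or not domain:
        return "***"  # not a recognizable address, so hide it entirely
    return f"{local[0]}***@{domain}"


def pseudonymize(value: str) -> str:
    """Replace an identifier with a keyed hash so records can still be joined."""
    digest = hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


record = {"patient_id": "P-10293", "email": "jane.doe@example.com", "diagnosis": "J45"}
masked = {
    "patient_id": pseudonymize(record["patient_id"]),
    "email": mask_email(record["email"]),
    "diagnosis": record["diagnosis"],
}
print(masked)
```

The keyed hash is deterministic, so the same patient ID always maps to the same token. That keeps joins across tables possible while hiding the original value from analysts.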

Strategic Mentorship and Knowledge Sharing

As an industry leader, Abdallah is committed to mentoring and sharing his knowledge. He has conducted numerous internal training programs, fostering a culture of learning within organizations. He has also presented at industry conferences, sharing his insights on data architecture and digital transformation. He is a respected thought leader.

- Internal Training Programs: Focused on cloud computing, data governance, and big data analytics.

- External Contributions: Publications in industry journals, presentations at conferences, and active participation in online forums.

- Building a Data-Centric Culture: Emphasizes data literacy and works to make data accessible to everyone in the organization.

The Evolving Landscape: Future Trends in Data Architecture

The future of data architecture is shaped by emerging technologies like AI/ML, edge computing, and serverless architectures. AI and machine learning are increasingly integrated into data systems, enabling automation and intelligent decision-making. Scalable infrastructure is vital for handling growing data volumes and ensuring high availability. Digital transformation is driving the adoption of cloud-based solutions and agile development methodologies. Data security is equally critical, as growing threats demand robust protection measures.

- Emerging Technologies: AI/ML, edge computing, serverless architectures, quantum computing.

- Anticipated Challenges: Data ethics, security, scalability, data interoperability.

- Abdallah’s Perspective: He believes that the future of data architecture lies in building intelligent, secure, and scalable systems that empower businesses to make data-driven decisions.

Conclusion: Synthesizing Abdallah’s Legacy and Impact

Davi Abdallah’s contributions to data architecture are undeniable. He has demonstrated exceptional expertise in cloud-based solutions, big data analytics, data governance, and real-time data processing. His work has had a significant impact on numerous organizations, enabling them to leverage data for business success. His strategic vision, technical proficiency, and commitment to mentorship make him a true technology expert.

In a world where data is the new currency, individuals like Davi Abdallah are indispensable. Their ability to architect robust and scalable data systems is crucial for driving innovation and achieving business objectives. As you look to your own data strategies, remember the importance of a skilled Principal Data Architect to navigate the complexities of the data landscape.

Navigating Hybrid Cloud Environments: Balancing On-Premises and Cloud Synergies

- Hybrid cloud environments combine on-premises infrastructure with public cloud services, offering flexibility and scalability. This approach allows organizations to leverage existing investments while adopting cloud innovations.

- A key challenge is ensuring seamless data flow and integration between on-premises and cloud systems. Effective strategies include using APIs, message queues, and data virtualization tools.

- Organizations often use hybrid cloud for scenarios involving sensitive data, regulatory compliance, or latency-critical applications. For example, a financial institution might store sensitive customer data on-premises while using cloud services for analytics and application development.

- According to a recent report by Gartner, over 75% of mid-size and large organizations have adopted a hybrid cloud strategy.

- Key components of a hybrid cloud setup include:

  - On-premises data centers

  - Private clouds

  - Public cloud services (AWS, Azure, GCP)

  - Secure network connections

  - Data integration tools
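The message-queue approach mentioned above can be illustrated with a small in-process sketch. Python's standard `queue.Queue` stands in for a real message broker here, and the function names, the `card_number` field, and the sample orders are all assumptions made for the example.

```python
import json
import queue

# In-process stand-in for a message broker linking the two environments.
broker = queue.Queue()


def publish_from_on_prem(order):
    """On-premises side: drop the sensitive field, then enqueue the event as JSON."""
    safe_event = {k: v for k, v in order.items() if k != "card_number"}
    broker.put(json.dumps(safe_event))


def consume_in_cloud():
    """Cloud side: drain whatever is waiting and return the decoded events."""
    events = []
    while not broker.empty():
        events.append(json.loads(broker.get()))
    return events


publish_from_on_prem({"order_id": 1, "amount": 42.5, "card_number": "4111-0000-0000-0000"})
publish_from_on_prem({"order_id": 2, "amount": 17.0, "card_number": "4111-0000-0000-0001"})
received = consume_in_cloud()
print(received)
```

The point of the design is decoupling: the on-premises producer decides what leaves the building, and the cloud consumer only ever sees the filtered events, at its own pace.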

The Critical Role of Data Lake Architecture in Modern Analytics

- Data lakes are centralized repositories that store vast amounts of raw data, whether structured, semi-structured, or unstructured. They enable organizations to perform advanced analytics, machine learning, and data exploration.

- Unlike traditional data warehouses, data lakes support diverse data types and formats, providing flexibility for data scientists and analysts.

- Data lakes typically use distributed file systems like HDFS or cloud-based object storage like AWS S3 or Azure Data Lake Storage.

- A well-designed data lake architecture includes:

  - Ingestion layer: Collecting data from various sources

  - Storage layer: Storing data in its native format

  - Processing layer: Transforming and analyzing data

  - Consumption layer: Delivering insights to users

- A statistic from IDC shows that organizations with well-implemented data lakes see a 25% improvement in time to insight.

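- Data lakes allow for schema-on-read: structure is applied when the data is queried rather than when it is written, so the same raw data can serve analyses that were not anticipated at ingestion time.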
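Schema-on-read is easier to see in code than in prose. The sketch below keeps raw JSON lines exactly as they arrived and applies a schema only at query time. The sample events, the `purchase_schema` mapping, and the `read_with_schema` helper are all hypothetical names chosen for illustration.

```python
import json

# Raw events stored exactly as they arrived; fields vary between records.
raw_lines = [
    '{"user": "a1", "amount": "19.90", "channel": "web"}',
    '{"user": "b2", "amount": 5}',
    '{"user": "c3", "channel": "store", "coupon": "SPRING"}',
]

# The schema lives with the reader, not with the storage layer.
purchase_schema = {"user": str, "amount": float}


def read_with_schema(lines, schema):
    """Parse raw JSON lines and project each one onto the requested schema."""
    rows = []
    for line in lines:
        record = json.loads(line)
        row = {}
        for field, cast in schema.items():
            value = record.get(field)
            # Missing fields become None instead of failing the whole read.
            row[field] = cast(value) if value is not None else None
        rows.append(row)
    return rows


rows = read_with_schema(raw_lines, purchase_schema)
total = sum(r["amount"] for r in rows if r["amount"] is not None)
print(rows)
print(total)
```

A different team could read the same three lines with a different schema, for example `{"user": str, "coupon": str}`, without anyone rewriting the stored data.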

Implementing Effective Data Integration Strategies for Seamless Data Flow

- Data integration is essential for creating a unified view of data across disparate systems. Effective strategies ensure data consistency, accuracy, and timeliness.

- Common data integration techniques include:

  - Extract, Transform, Load (ETL)

  - Extract, Load, Transform (ELT)

  - Data virtualization

  - API integration

  - Message queues

- Choosing the right integration strategy depends on factors like data volume, velocity, variety, and business requirements.

- Data integration tools are used to automate data movement.

- A recent survey by Talend found that 80% of organizations struggle with data integration challenges.
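The difference between ETL and ELT comes down to where the transformation runs. The sketch below contrasts the two using Python's built-in `sqlite3` module as a stand-in for a warehouse. The table names, the sample rows, and the `to_amount` helper are assumptions for illustration, and the `GLOB` filter is only a crude numeric check.

```python
import sqlite3

source_rows = [("alice", " 120.50 "), ("bob", "80"), ("carol", "n/a")]


def to_amount(text):
    """Convert a raw amount string to float, or None when it is not numeric."""
    try:
        return float(text.strip())
    except ValueError:
        return None


conn = sqlite3.connect(":memory:")  # in-memory stand-in for a warehouse

# ETL: transform in application code first, then load only the clean rows.
conn.execute("CREATE TABLE sales_etl (customer TEXT, amount REAL)")
clean = [(name, to_amount(raw)) for name, raw in source_rows]
conn.executemany(
    "INSERT INTO sales_etl VALUES (?, ?)",
    [row for row in clean if row[1] is not None],
)

# ELT: load the raw strings as-is, then transform inside the database with SQL.
conn.execute("CREATE TABLE sales_raw (customer TEXT, amount_text TEXT)")
conn.executemany("INSERT INTO sales_raw VALUES (?, ?)", source_rows)
conn.execute(
    """
    CREATE TABLE sales_elt AS
    SELECT customer, CAST(TRIM(amount_text) AS REAL) AS amount
    FROM sales_raw
    WHERE TRIM(amount_text) GLOB '[0-9]*'
    """
)

etl_total = conn.execute("SELECT SUM(amount) FROM sales_etl").fetchone()[0]
elt_total = conn.execute("SELECT SUM(amount) FROM sales_elt").fetchone()[0]
print(etl_total, elt_total)
```

Both paths end with the same clean totals. The ELT path also keeps the untouched `sales_raw` table, which is why it pairs naturally with data lakes and cloud warehouses where storage is cheap and transformation can be rerun later.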

Future-Proofing Data Systems: Strategies for Adaptability and Innovation

- The rapid pace of technological change requires organizations to build adaptable and innovative data systems. Future-proofing strategies include:

  - Adopting cloud-native architectures

  - Embracing microservices and containerization

  - Implementing Infrastructure as Code (IaC)

  - Leveraging AI and machine learning for automation

  - Fostering a culture of continuous learning and experimentation

- Organizations must prioritize scalability, flexibility, and resilience to handle future data demands.

- Utilizing serverless computing can help data systems scale quickly.

- It is vital to stay up to date on new database technologies.

- Organizations that invest in modern data systems gain a competitive advantage.

Tony James is a master of humor and wordplay, crafting clever puns and jokes that tickle funny bones worldwide. His wit guarantees laughter in every blog post!